Intro

The other day a friend who owns a small business asked me for help. He needed to download all the photos from a set of URLs on his own website, but there were hundreds of URLs, each with a few photos, and he didn’t really know how to go about it “quickly” (as always, it was somewhat urgent…).

So I skipped lunch that day, and lent him a hand, as it seemed rather straightforward…

The task

191 URLs in an Excel file. Each URL points to a similar (public) page for a different person (a “model”, more specifically), with photos of that person.

Objective: Download all those photos, keeping track, if possible, of the person’s name (also available on the corresponding page).

Finding the right filters

A classic trick when thinking about crawling a web page is to look for the right filters in the page’s code. That is: can you select what you need with a specific tag or tag ID, or maybe with a tag whose matches you later filter with a regex?

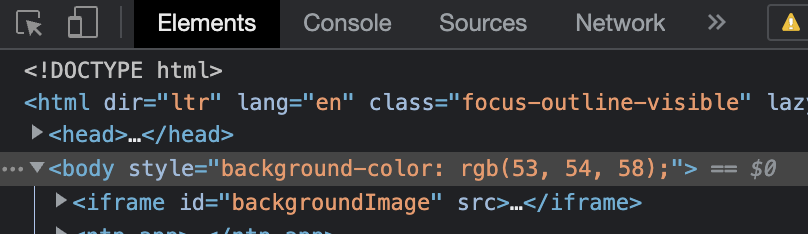

In this case, I opened one of the pages in my usual browser and pressed F12 to get to the element inspector (picking elements with the mouse pointer is very handy). Looking through the page, I concluded that, for each of the URLs (all in the same page format):

- The name of the model is the only “h2” tagged text in the page

- The images all had the <img /> tag (of course), but the relevant ones ALSO shared a pattern in the tag: the image source path always included a folder named “formidable/”
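To make the two filters concrete, here is a minimal sketch with rvest. The HTML snippet, the name and the image paths are made up for illustration (they only mimic the structure described above, not the real site), and the variable names are my own choice:

```r
library(rvest)

# A made-up page that mimics the structure described above (not the real site).
page <- read_html('
  <html><body>
    <h2>Jane Doe</h2>
    <img src="/static/logo.png" />
    <img src="/uploads/formidable/12/photo-1.jpg" />
    <img src="/uploads/formidable/12/photo-2.jpg" />
  </body></html>')

# Filter 1: the model name is the only <h2> on the page.
model_name <- html_text2(html_element(page, "h2"))

# Filter 2: keep only the <img> sources whose path contains "formidable/".
img_src   <- html_attr(html_elements(page, "img"), "src")
photo_src <- img_src[!is.na(img_src) & grepl("formidable/", img_src, fixed = TRUE)]
```

On a real page, read_html() would take the URL instead of the inline string. If the src values turn out to be relative paths, they would also need to be completed into full URLs before downloading.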

Coding the crawler

The URLs were in an Excel file. I could have saved it as CSV, but having the readxl package handy, why bother 🙂
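Reading the URLs straight from the workbook could look roughly like this. It is only a sketch: the helper name read_url_column, the file name "urls.xlsx" and the column name "url" are assumptions of mine, since the actual file layout is not shown here.

```r
library(readxl)

# Read the first sheet of a workbook and return one column as a character
# vector, dropping empty cells. "url" is a hypothetical column name.
read_url_column <- function(path, column = "url") {
  sheet <- read_excel(path)
  urls  <- trimws(as.character(sheet[[column]]))
  urls[!is.na(urls) & nzchar(urls)]
}

# Hypothetical usage:
# urls <- read_url_column("urls.xlsx")
```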

Then I started thinking about packages such as httr, xml2, and so on… But in the end I only needed the rvest package for working with the web page.

What I published a few minutes ago is a somewhat cleaner version of what I put together the other day, but the spirit is the same.

A few things happened (as they always do):

- Some persons were in fact repeated, so in order to avoid mixing and/or overwriting things, I assumed a duplicate name meant a duplicate set of photos, and skipped it.

- Some persons’ names had a weird Unicode character or similar issues. I had to encode those names before using them as output folder names; otherwise the whole thing sometimes broke midway.

- Some photo links were in fact returning 404; I had to add a check for that, so the work could continue gracefully.
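The three safeguards above can be sketched in base R as follows. This is not the published code: the helper names (safe_dir_name, download_safely, save_photos), the "photos" output folder and the choice to replace every non-ASCII character with an underscore are assumptions made for illustration.

```r
# Turn a scraped name into an ASCII-only folder name: every run of other
# characters (spaces, slashes, accents, ...) becomes a single "_".
safe_dir_name <- function(name) {
  cleaned <- gsub("[^A-Za-z0-9_-]+", "_", enc2utf8(name), perl = TRUE)
  cleaned <- gsub("^_+|_+$", "", cleaned)
  if (nzchar(cleaned)) cleaned else "unnamed"
}

# Download one file; return TRUE on success and FALSE on any failure
# (for example a 404), so the caller can carry on with the next photo.
download_safely <- function(url, destfile) {
  tryCatch(
    download.file(url, destfile, mode = "wb", quiet = TRUE) == 0,
    warning = function(w) FALSE,
    error   = function(e) FALSE
  )
}

# Save one person's photos; a name that already has a folder is treated
# as a duplicate and skipped.
save_photos <- function(name, photo_urls, out_root = "photos") {
  target <- file.path(out_root, safe_dir_name(name))
  if (dir.exists(target)) return(invisible(FALSE))
  dir.create(target, recursive = TRUE)
  for (u in photo_urls) {
    ok <- download_safely(u, file.path(target, basename(u)))
    if (!ok) message("Skipped (could not download): ", u)
  }
  invisible(TRUE)
}
```

Note the mode = "wb" argument: images are binary files, and without it download.file() may corrupt them on Windows. Replacing characters with "_" is cruder than transliterating them, but it does not depend on the platform.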

But all in all, it was a pretty straightforward exercise.

Conclusions

What was new there was the downloading of files (images in this case). Being used to working with HTML text, I had never had a use for the “download.file()” function. Quite straightforward, and a cool (albeit very simple) addition to my bag of tricks.

Other than that, the whole thing took less than an hour. I still got out and bought myself a sandwich that day, after all 🙂