A short entry to mention the actual observable/understandable progress.

So you have a few nodes on a network (with some considerations). You can act on two variables for each of the nodes: Their protection (beta) or their quickness to clear an infection (mu). Improving both parameters for a node reduces the risk of infection for itself and the network. (Think epidemic, or computer worm…) (Note: In this very first but functional version, either you improve the beta or leave the default (worse) beta, and you do the same for the mu, and repeat for each node of your network).

You can do that but you don’t have money to spend for all the nodes on both variables (ideal scenario).

What do you do?

Well, I could come up with my own theories, but what my program does is… It gives me its own recommendations.

Here is one I felt made rather obvious sense and would be easy to illustrate the value of the project.

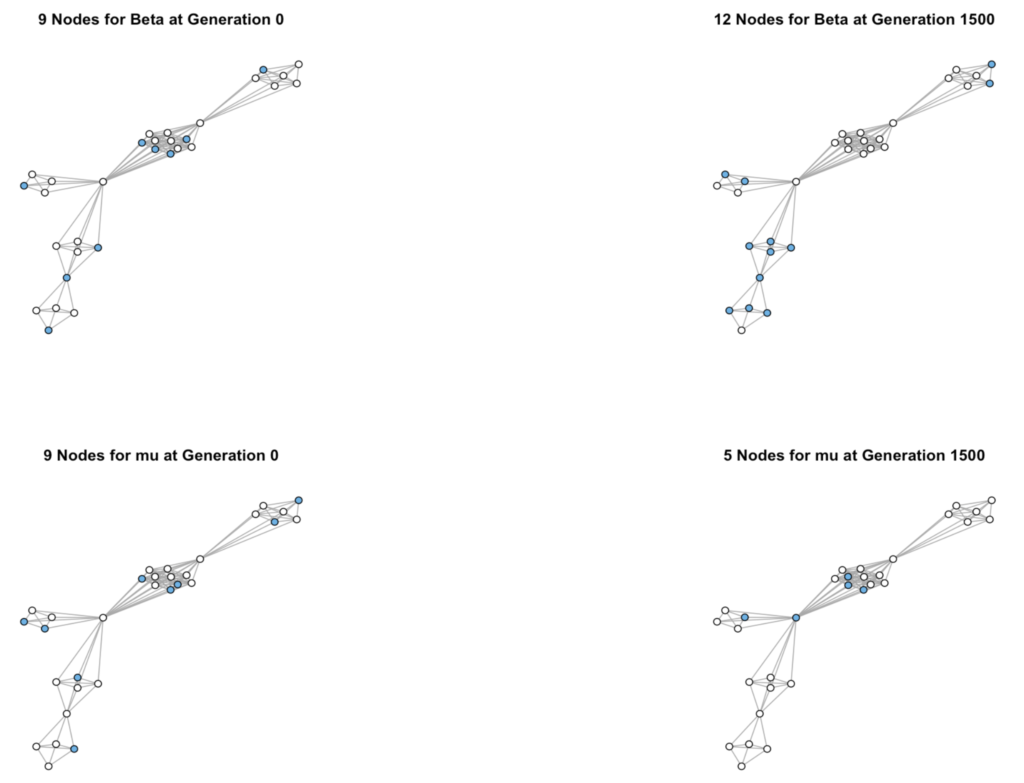

On the left, assignments of improvements at random (blue means: apply measure to node), before trying to optimize the use of your limited budget.

On the right, the proposal to “best distribute the budget”:

The GA chose to distribute the limited resources ON OPPOSITE subsets of nodes! It chose to either protect or detect, rarely do both. This complementary approach seems to be working best (given a gazillion other pre-set parameters, that is).

Moreover, for the chosen thresholds (that could vary), it appears that for this particular graph (the thing doesn’t work equally well for any graphs, but that’s for my dissertation), it could be better to focus on protecting the perimeter and monitoring the center! (Think it through: If an infection spreads, you want central nodes to ensure detection before the infection spreads from one side to the other…)

I couldn’t say whether this is THE actual best option (it probably isn’t), but if you look at it (and understand the gibberish I just tried to convey) you could hopefully support such a solution!

And so not only it works, it also makes sense!

More importantly

Most important to me here is, the concept is valid. There is a basic (but bad) way of distributing your limited resources, and then there IS a better way, and from all my initial results, this is consistently true.

Let me put it in Cybersecurity Operations words, with an example I hope can be understood by most:

Can’t patch all your servers? Maybe something like this could tell you how to choose which to patch, and which to ensure you have monitored in your SIEM, based on a stochastic simulator optimisation approach (a.k.a. using numbers and probabilities to search for a good solution)