Yes, I’m stepping away from pure coding concepts once again (sorry, I know the name of the Blog can be misleading sometimes… Think of all these sysadmin entries as prepping a working environment for our future data analysis exercises ;))

This bit is more about general thoughts & concepts on maintaining a running environment as it grows more and more complex, be it my simple “home lab server” or in “real life”. No R code for today, but I thought the ideas here were relevant. I hope you won’t mind.

The sysadmin

So first off, remove unused packages (I don’t really get why I would want the pastebinit package on my server, but maybe I should have gone for another Ubuntu version, instead of Server 20.04…)

One thing is to get up and running fast (Ubuntu has always installed just fine for me), and another is to keep unnecessary services and packages out (the more stuff is in there, the bigger the “attack surface”). So I removed a few things a couple of weeks ago (only those I was comfortable wouldn’t break anything…):

pastebinit, bind9-host (et al.), postfix (if I need it later, I’ll install it again).

Then apt-get autoremove to get rid of unused dependencies.
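
A minimal sketch of how that cleanup could be scripted. The `run` helper and the `APPLY` variable are made up for illustration, and `--purge` (which also removes configuration files) is an assumption, not something stated above. By default the script only prints what it would do:

```shell
#!/bin/sh
# Packages named above; adjust the list to your own system.
PACKAGES="pastebinit bind9-host postfix"

# Hypothetical helper: print the command unless APPLY=1 is set.
run() {
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    else
        echo "would run: $*"
    fi
}

cleanup_packages() {
    # $PACKAGES is left unquoted on purpose so it splits into separate arguments.
    run apt-get remove --purge -y $PACKAGES
    run apt-get autoremove -y
}

cleanup_packages
```

Once the printed commands look right, running the same script as root with `APPLY=1` set would execute them for real. apt-get's own `-s` (simulate) flag is another way to preview a removal.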

Note: While at it, remember to apt-get update and dist-upgrade from time to time. I personally like to add that to my crontab. If anything breaks, this is a lab, so not too worried about it.

OK. Another thing: fprobe had been running non-stop since I installed it. That takes up some space. So I added an entry in my crontab to delete anything created up until midnight. Yes, that greatly limits future analysis, but I’ll get to that (I probably won’t really need all the netflows for analysis, as long as I am capable of storing some sort of summary). And if I really need to keep them all, I can always remove the entry from the crontab, this is a lab after all…
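
The crontab entry itself is not shown here, so what follows is only a guess at what such a cleanup could look like. It assumes the flow files land in a single directory and uses the modification time as a stand-in for "created"; the function name is hypothetical:

```shell
#!/bin/sh
# Delete regular files last modified before today's midnight.
# Needs GNU find (for -newermt and -delete), which Ubuntu ships.
prune_before_midnight() {
    dir="$1"
    midnight="$(date +%F) 00:00:00"
    find "$dir" -type f ! -newermt "$midnight" -delete
}

# Only act when a directory is passed on the command line.
if [ "$#" -gt 0 ]; then
    prune_before_midnight "$1"
fi
```

Saved under a hypothetical path such as /usr/local/bin/prune-flows.sh, it could be called from a crontab line like `5 0 * * * /usr/local/bin/prune-flows.sh /path/to/flow/files`, with the directory replaced by wherever the flows are actually stored.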

A few minutes looking at the output of /var/log/syslog, then htop, then lsof…

Through lsof, I found “ModemManager”, and I really don’t know why I would need it, so I went ahead and disabled it.

Same for snapd (what I have read about it sounds nice, but as of yet I have never used it… Off you go). That one was a bit trickier, but I found help here:

https://www.kevin-custer.com/blog/disabling-snaps-in-ubuntu-20-04/

Anyway, I could go on, but that’s boring to you, and I’m pretty happy with the overall system status for now.

The security-conscious

I check from time to time that there is nothing weird listening for connections on my server:

netstat -pan | grep -i listen
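
To make "nothing weird" a bit more mechanical, one option is to compare the listening ports against a short list of ports one expects. The sketch below is an assumption-heavy illustration: it reads `ss -H -tuln` style output (ss being the iproute2 counterpart of netstat, not the command used above), and the function name and the list of expected ports are made up:

```shell
#!/bin/sh
# stdin: lines in the format printed by `ss -H -tuln`
#        (Netid State Recv-Q Send-Q Local-Address:Port Peer-Address:Port)
# $1:    space-separated list of ports we expect to see
# Prints every listening port that is NOT in the expected list.
unexpected_ports() {
    awk -v allowed="$1" '
        BEGIN {
            n = split(allowed, list, " ")
            for (i = 1; i <= n; i++) ok[list[i]] = 1
        }
        {
            # The port is whatever follows the last ":" of the local address.
            m = split($5, parts, ":")
            port = parts[m]
            if (!(port in ok)) print port
        }
    ' | sort -un
}

# Example (not run here): ss -H -tuln | unexpected_ports "22 53"
```

An empty result means every listening port was on the list; anything printed deserves a closer look with the netstat command above.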

Then although one is obviously never 100% sure, and just in case, I go the extra mile and add a rootkit check with rkhunter (well, if this is going to be a security check at home, I might as well do that too):

apt-get install rkhunter

rkhunter --propupd

rkhunter --check

One can then check the results in:

/var/log/rkhunter.log

I might want to process this output at a later stage using my R dashboards, who knows…
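
As a first step towards that, the warnings could be pulled out of the log into plain lines that are easy to load elsewhere. This is only a sketch: it assumes warning lines look like `[HH:MM:SS] Warning: some text`, and the function name is hypothetical:

```shell
#!/bin/sh
# Print the text of every warning line in an rkhunter-style log,
# dropping the leading "[HH:MM:SS]" timestamp and the "Warning:" label.
rkhunter_warnings() {
    sed -n 's/^\[[0-9:]*\] *Warning: *//p' "$1"
}

# Only act when a log file is passed on the command line.
if [ "$#" -gt 0 ]; then
    rkhunter_warnings "$1"
fi
```

Multi-line warnings (where details continue on indented lines) would need more care than this one-liner gives them.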

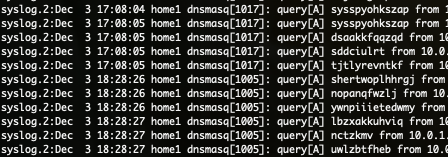

Side note: Now that I have my server intercepting all DNS queries from my laptop(s) and mobile phone, I thought it would be good to check a bit what was happening there…

I found a few worrying entries, with random-looking names and no TLD:

egrep "query\[A\] [a-z]+ " syslog.*
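
A small variation on that search, in case a de-duplicated list of the names is more useful than the raw log lines. It assumes dnsmasq-style entries of the form `query[A] name from 192.168.x.x`; the function name is made up:

```shell
#!/bin/sh
# Read log lines on stdin and print each distinct single-label
# (lowercase letters only, no dot) name that was asked for in an A query.
single_label_queries() {
    sed -nE 's/.*query\[A\] ([a-z]+) from .*/\1/p' | sort -u
}

# Example (not run here): cat syslog.* | single_label_queries
```

Names containing a dot, such as www.example.com, do not match the pattern and are left out.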

That was a first for me. So, limiting all my connections as much as I could, I tried to reproduce the thing… Thank goodness, it was “just” Chrome testing DNS connectivity for single-word searches in the navigation bar (or so I found). Anyway, everything seems to be OK. Next up, a reboot to double-check I didn’t break anything, and as little effort as all that was, I feel better and a bit liberated. I can now re-focus on coding.

These are just two or three things from the “top of my head” that I did a few nights ago, and thought I would share, for the following reason…

My general thoughts about “Muda”

There is always room for improvement, as tiny as it might be (I guess this could be associated with the “kaizen” concept). And it’s good to stop and do some tidying up from time to time, as (I believe) it makes things more sustainable in the long run.

I also believe that the concept of “technical debt” goes way beyond coding practices (I definitely do NOT consider myself an “expert” in either systems administration or coding, by the way).

What is “technical debt”? Glad you asked. Let me start with a generic idea:

In a company, creating new functionality & services for the clients, growing the organisation’s size and hierarchy again and again, adding bureaucracy to the processes, well… All that might be necessary. But I do believe it is good practice to sometimes stop producing new things and start reducing. (If I ever work on my own, create a startup or have any control over the work I do, I believe I would institutionalise one month a year in which no one is allowed to buy any new server or software, and everyone instead works on taking better advantage of what is already in place, and/or getting rid of what is unnecessary). For example, one might have to input the hours of their work-day in TWO different systems, for “productivity control” purposes. It’s not that it’s a big deal. And I get that companies need some form of control (it’s even required by law in some places…). But why not consolidate the two reporting systems into one? (By the way, true story.)

I think these “unnecessary things” are what is called “muda” in Japanese, a concept used at the Toyota plants. Waste, if you wish. (I do NOT know Japanese; but these guys came up with, or made popular, the concepts of kaizen, muda, and the like). As for using what you already bought, I don’t know the Japanese term for it, but I call it common sense. Why buy a new product to cover things you could begin to cover with functionality you already paid for in another product, when you have never taken the time to get the most out of that older product? Why not use all the functionality you have in the first place? Anyhow.

Not only at work

Although I don’t always like to do the dishes, I still do it, as it makes for a better living environment. This is not “producing” anything, no, but it helps (at least in the long run). Sorting files and ordering emails (maybe a bad example) and folders is not “productive” and it doesn’t produce “value” per se. But just the same, I do it (from time to time, that is): I stop “producing” and start re-ordering, reducing, zipping, deleting.

One could (maybe once a year) evaluate the need for that Facebook account or full-fledged TV channels pack, consider that one has not used them in a long while, and reduce right there: Delete the Facebook account (do not worry, these guys own WhatsApp, so they won’t miss my personal data much). Renegotiate with the ISP and get rid of the phone landline (I never knew my own phone number), keeping only the Internet. And/or switch ISP to pay less, for the same value.

Let me tell you: These things really do make me feel better.

And I don’t need to go fully minimalist in the strictest sense. Every little improvement is great.

Back to coding

Maybe revisiting code I created years ago, and changing it to use the newly found concepts of “Functional Programming” (I didn’t even know the name for it, although I had already started using it. I am learning :)), using lapply and unnamed functions instead of for loops… Maybe this is not actually creating any new dashboard or running any new algorithm on some dataset.

But we are getting to the “technical debt” concept in its proper context (i.e. “coding”):

I have created some scripts of more than 2000 lines. To many of you, this is probably “peanuts”, I get that (you need to understand, coding is by far not in my main job description: at work I usually do it because I hate doing certain stuff manually (e.g. creating reports) too many times if I can automate it a bit. Oh, and yes, I use R to do some reporting :)).

When I came back to those scripts – a year or so later – to add some new functionality (e.g. a new graph), and as I have improved (ever so little) my R Kung-Fu, well: the past code looked very bad.

Instead of trying to fit the new functionality into what the script did, I chose to postpone it a little, and spend a few hours re-visiting that code. A few functions later, cleaning up some commented code that had been sitting there for a year, some (VERY basic at the time) sapply replacing 5 identical sets of calculations on 5 very similar paths for 5 datasets from 5 sources… You get the idea.

Well, I managed to downsize it to just above 700 lines. Not that impressive, I know, but I thought it was still a great improvement (to me, it was ;)). I also made it MUCH faster (the mix of lapply & rbind.fill was a revelation at the time).

Did I waste my time? Let’s see: We might use the script about 3 times a week, at least two of us in my team. It took about 3 minutes each time, and now it takes about 20 seconds (with the new functionality, which took me very little effort to implement). So let’s round down to 2 minutes saved, times 3*2*4 = 24 executions a month: in a month, we’d save 48 minutes. In three months I’d have made up for the “lost” time revisiting the code. This, to me, is a very simplified example of working on “technical debt”.
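
The same back-of-the-envelope calculation, written out so the numbers can be swapped. The 144 minutes of invested time is an assumption: the text only says "a few hours", and 144 minutes is simply what three months at 48 minutes a month works out to. The function name is made up:

```shell
#!/bin/sh
runs_per_month=$((3 * 2 * 4))      # 3 runs a week x 2 people x 4 weeks
minutes_saved_per_run=2            # 3 min down to about 20 s, rounded down
saved_per_month=$((runs_per_month * minutes_saved_per_run))

# Months needed to win back a given number of invested minutes (rounded up).
months_to_break_even() {
    echo $(( ($1 + saved_per_month - 1) / saved_per_month ))
}

echo "saved per month: ${saved_per_month} min"
echo "break-even for 144 invested minutes: $(months_to_break_even 144) months"
```

With these inputs the script reports 48 minutes saved per month and a break-even after 3 months; a longer refactoring session, or a script that is run less often, pushes that date out accordingly.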

Conclusions

You might have noticed from last week’s post and this one that I have started thinking about complex software with many pieces, objects, functions, API calls, servers, etc… That’s a whole different beast from simplifying one script as explained above.

But the same philosophical ideas would probably apply, right? As it turns out, my suspicions were correct, if I am to believe the novel “The Unicorn Project” (by Gene Kim), which I read a few weeks ago (and which motivated me to write the second, more philosophical part of this post).

If this post rang any bell for you, I would definitely recommend you check out that book (No, I don’t know the author, I don’t gain anything from recommending it… I just enjoyed it ;)).

And I promise to make the next post about using R!