For the past two Blog entries, we spent time preparing for today:

We want to use a Postgres SQL Database backend to work with our JSON data, from R.

Before we continue…

We’re still missing one piece: the table(s) in the Database. Let’s tackle that very quickly for today, as the focus will be on R, not on the Database.

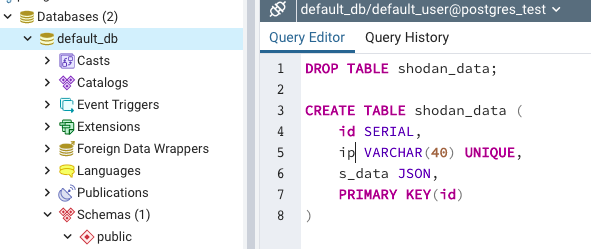

Let’s keep it VERY simple: Let’s take our Shodan JSON result for an IP and store that into the DB. So our basic use-case requires a table for that. In SQL Code:

CREATE TABLE shodan_data ( id SERIAL, ip VARCHAR(40) UNIQUE, s_data JSON, PRIMARY KEY(id) )

The “ip” format part might seem weird if you’re only considering IPv4, but IPv6 needs some more space. Anyhow, it’s just a string, from the database perspective (which might be improved upon in some cases, but that’s beyond our goals for today).

In PgAdmin, you can use the “Query Tool” (from almost anywhere in the rigt-click context menu):

A couple of details: Bare in mind, Postgres will transform uppercase variable names to lowercase behind the scenes. And that’s relevant, as you won’t be able to use that variable for queries, if you’re unaware…

On the other hand, the screenshot above aims at also showing: our default_user indeed sees it’s own database only. Which is a good thing.

Edited 2021/06/20: As it turns out there is a better column type for JSON contents, the “JSONB”. For the sake of this exercise it didn’t really matter, but if in doubt, you’ll probably want to go for JSONB instead of JSON. (The B apparently stands for “better”, so…)

Well, let’s now get to the R part of things

OK so we have a database, a user to connect to it, and a table ready to receive data.

The R code for today is on my GitHub account, as usual.

Let’s go through it swiftly:

First, we’ll use the RPostgres package for the DB Backend interaction.

library(curl) library(jsonlite) library(RPostgres)

Second, as we will be using that quite a lot, I create a connection function tailored for this script, which will simply wrap the connection details to our particular setup.

establish_con <- function() {

dbConnect(RPostgres::Postgres(), dbname = "default_db",

host = "postgres_test",

port = 5432,

user = "default_user",

pass = db_t_pass)

}

Note: There are better ways to authenticate and/or use the connection details in R, but for the quick & dirty demo, this will have to do. The password, you’ll notice, is actually coming from a variable, which was loaded from an external file. NEVER put your passwords directly into your source code. Just in case.

OK. So then we get our JSON data in an object, like so (copied from our past examples):

conn <- curl(paste0("https://api.shodan.io/shodan/host/",

nslookup(hostname)[1], # Or you can lookup any IP you wish...

"?key=", s_apikey))

s_q_result <- readLines(conn, warn = FALSE)

close(conn)

You might notice that this time around, we don’t do “fromJSON” on “s_q_result”. That’s on purpose, this time we want to keep the JSON as-is, and put that in our database.

# Writing into Table directly from a data.frame... con <- establish_con() ## DUMMY EXAMPLE, the IP should be real... dbWriteTable(con, "shodan_data", data.frame(ip = "my IP checked", s_data = s_q_result), append = TRUE, row.names = FALSE) dbDisconnect(con)

This is one way to go (and pretty cool, by the way!). So if you have a dataframe that conforms everything required by the corresponding table in the Database, you can simply “WriteTable” it!

Careful: column names must be perfectly identical. Data formats will be checked by the RDBMS. And other cool stuff that will help us avoid some basic mistakes.

For instance, a wrongly-formatted JSON object will NOT get past basic controls for inserts. SO if you construct a JSON object from scratch and forget a double quote (or to escape it), well things won’t “fly”.

Another way to go is to INSERT into our table new entries:

# Adding data to a table, using INSERT:

con <- establish_con()

t_query <- "INSERT INTO shodan_data(ip, s_data) VALUES ('anotherIP', '{\"object_test\": \"test\"}');"

res <- dbSendQuery(con, t_query)

print(res)

dbClearResult(res)

dbDisconnect(con)

Now that will fail if say we put a “UNIQUE” constraint on the IP address, for instance, if that IP is already in the table. Again, this is GOOD as it protects us from screw*** our data.

And once we have data in there, we might want to update instead of recreating everything, and so we’d go the traditional way, possibly:

# What if you want to change some value(s)?

con <- establish_con()

t_query <- "UPDATE shodan_data SET s_data = '{\"object_test\": \"change test\"}' WHERE ip = 'anotherIP';"

res <- dbSendQuery(con, t_query)

dbClearResult(res)

dbDisconnect(con)

Getting back our data

All is good, we can insert stuff. But can we get it back?

For basic queries, sure:

con <- establish_con() t_query <- "SELECT * FROM shodan_data" res <- dbSendQuery(con, t_query) res2 <- dbFetch(res) # For potentially later use... dbClearResult(res) dbDisconnect(con)

But what about nested JSON stuff? That’s a bit more tricky (and I had to look it up, as it was my first time around Postgres & JSON stored objects…). But, after a while, it’s a bit more intuitive:

con <- establish_con() t_query <- "SELECT ip, s_data->'country_code' AS country_ISO, s_data->'domains'->>0 AS first_domain FROM shodan_data" res <- dbSendQuery(con, t_query) res2 <- dbFetch(res) # For potentially later use... dbClearResult(res) dbDisconnect(con)

And it works!

Final note here: from the last bit of code, “res2” will contain a Dataframe. Which is convenient for us to manipulate.

Conclusions

Armed with the past few entries of the Blog, we’re armed and prepared to:

- Install a container-based Postgres SQL RDBMS

- Install and connect a container-based Database client (PgAdmin in this case)

- We already had an RStudio container, but now we’re able to use it to connect to our database and work with it.

- And as a bonus, we can actually store and retrieve JSON-formatted data. “Cherry on the icing on the cake”.

Now we’ll be able to fetch our JSON data, and sometime we’ll use the jsonlite package functions, like “fromJSON” and “toJSON”, to manipulate the data from within our script. Where the balance will be, well I guess that will depend on each use-case…

But I’m happy: A new important topic covered: SQL Databases. In our case, JSON-supporting well known RDBMS. And it is an important thing to know. So I hope the last few entries will help you begin your journey there (but it doesn’t replace Coursera’s Dr Chuck “postgres for everyone”, nor would I in my wildest dreams expect to compete there ;)).